“It is disconcerting to reflect on the number of students we have flunked in chemistry for not knowing what we later found to be untrue.” — Attributed to Deming by B.R. Bertramson, in Robert L. Weber, Science with a Smile, 1992

Wednesday, 30 April 2014

Science has come such a long way since the beginning of Time 2000 years ago

Ignorance begets confidence; it's also a great defence against logic, reason and fact

The Dunning–Kruger effect is a cognitive bias which can manifest in one of two ways:

- Unskilled individuals suffer from illusory superiority, mistakenly rating their ability much higher than is accurate. This bias is attributed to a metacognitive inability of the unskilled to recognize their ineptitude.[1]

- Those persons to whom a skill or set of skills come easily may find themselves with weak self-confidence, as they may falsely assume that others have an equivalent understanding. See Impostor syndrome.

Dunning and Kruger gave credit to Charles Darwin, whom they quoted in their original paper ("ignorance more frequently begets confidence than does knowledge"). The phenomenon was first tested in a series of experiments published in 1999 by David Dunning and Justin Kruger of the Department of Psychology, Cornell University.[2][3] The study was inspired by the case of McArthur Wheeler, a man who robbed two banks after covering his face with lemon juice in the mistaken belief that it would prevent his face from being recorded on surveillance cameras.[4] They noted earlier studies suggesting that ignorance of standards of performance is behind a great deal of incompetence. This pattern was seen in studies of skills as diverse as reading comprehension, operating a motor vehicle, and playing chess or tennis.

Dunning and Kruger proposed that, for a given skill, incompetent people will:

- tend to overestimate their own level of skill;

- fail to recognize genuine skill in others;

- fail to recognize the extremity of their inadequacy;

- recognize and acknowledge their own previous lack of skill, if they are exposed to training for that skill.[5]

If you’re incompetent, you can’t know you’re incompetent. […] the skills you need to produce a right answer are exactly the skills you need to recognize what a right answer is.

—David Dunning[7]

Supporting studies

Dunning and Kruger set out to test these hypotheses on Cornell undergraduates in psychology courses. In a series of studies, they examined the subjects' self-assessment of logical reasoning skills, grammatical skills, and humor. After being shown their test scores, the subjects were again asked to estimate their own rank: the competent group accurately estimated their rank, while the incompetent group still overestimated theirs. As Dunning and Kruger noted:

Across four studies, the authors found that participants scoring in the bottom quartile on tests of humor, grammar, and logic grossly overestimated their test performance and ability. Although test scores put them in the 12th percentile, they estimated themselves to be in the 62nd.[2]

Meanwhile, people with true ability tended to underestimate their relative competence. Roughly, participants who found tasks to be relatively easy erroneously assumed, to some extent, that the tasks must also be easy for others.[2]

A follow-up study, reported in the same paper, suggests that grossly incompetent students improved their ability to estimate their rank after minimal tutoring in the skills they had previously lacked, regardless of the negligible improvement in actual skills.[2]

In 2003, Dunning and Joyce Ehrlinger, also of Cornell University, published a study that detailed a shift in people's views of themselves when influenced by external cues. Participants in the study, Cornell University undergraduates, were given tests of their knowledge of geography, some intended to affect their self-views positively, some negatively. They were then asked to rate their performance, and those given the positive tests reported significantly better performance than those given the negative.[8]

Daniel Ames and Lara Kammrath extended this work to sensitivity to others, and the subjects' perception of how sensitive they were.[9]

Research conducted by Burson et al. (2006) set out to test one of the core hypotheses put forth by Krueger and Mueller in their paper "Unskilled, unaware, or both? The better-than-average heuristic and statistical regression predict errors in estimates of own performance": "that people at all performance levels are equally poor at estimating their relative performance."[10] To test this hypothesis, the authors report three studies, all of which manipulated the "perceived difficulty of the tasks and hence participants’ beliefs about their relative standing."[11] They found that when subjects were given moderately difficult tasks, the best and the worst performers varied little in their ability to accurately predict their performance. With more difficult tasks, the best performers were less accurate in predicting their performance than the worst performers. The authors conclude that these findings suggest that "judges at all skill levels are subject to similar degrees of error."[12]

Ehrlinger et al. (2008) made an attempt to test alternative explanations, but came to qualitatively similar conclusions to the original work. The paper concludes that the root cause is that, in contrast to high performers, "poor performers do not learn from feedback suggesting a need to improve."[13]

Studies on the Dunning–Kruger effect tend to focus on American test subjects. A study on some East Asian subjects suggested that something like the opposite of the Dunning–Kruger effect may operate on self-assessment and motivation to improve.[14] East Asians tend to underestimate their abilities, and see underachievement as a chance to improve themselves and get along with others.

Awards

Dunning and Kruger were awarded the 2000 satirical Ig Nobel Prize in Psychology for their paper, "Unskilled and Unaware of It: How Difficulties in Recognizing One's Own Incompetence Lead to Inflated Self-Assessments".[15]

Historical references

Although the Dunning–Kruger effect was put forward in 1999, Dunning and Kruger have noted similar historical observations from philosophers and scientists, including Confucius ("Real knowledge is to know the extent of one's ignorance."),[3] Bertrand Russell ("One of the painful things about our time is that those who feel certainty are stupid, and those with any imagination and understanding are filled with doubt and indecision", see Wikiquote),[13] and Charles Darwin, whom they quoted in their original paper ("ignorance more frequently begets confidence than does knowledge").[2] Geraint Fuller, commenting on the paper, noted that Shakespeare expressed similar sentiment in As You Like It ("The Foole doth thinke he is wise, but the wiseman knowes himselfe to be a Foole." (V.i)).[16]

The Myth of Multitasking or Sorry, I Killed You, I Just Had to Make This Call

More is just more, not better.

In fact more is often less

Is it rude to be constantly checking messages while you're socializing with someone else?

That's a matter of opinion.

But a professor friend emails to remind me that rudeness is actually the least of the problems with the perpetual multitasking of the smartphone generation:

This is the way kids these days think. My administration calls it "the millennial student" and apparently we are supposed to cater to their habits. Fully half of my 60 person general physics class this semester sits in the back of the room on either phone or laptop. They're not taking notes. The good ones are working on assignments for other classes (as if being present in mine causes the information to enter their pores). The bad are giggling at Facebook comments.

....But here's the thing: there is convincing evidence that this inveterate multitasking has a serious, measurable and long lasting negative effect on cognitive function. Look up Stanford psychologist Clifford Nass sometime. There's a lovely episode of Frontline from a year or so ago featuring him. He has shown that multitaskers are not only bad at multitasking, but they are also worse than nonmultitaskers on every individual one of the tasks.

That's the millennial student and it isn't something to be catered to. Put the damn iPhone down before you make yourself stupid.

I should have remembered that! Nass has been studying "high multitasking" for years, and his results are pretty unequivocal. Here's the Frontline interview:

What did you expect when you started these experiments?

Each of the three researchers on this project thought that ... high multitaskers [would be] great at something, although each of us bet on a different thing.

I bet on filtering. I thought, those guys are going to be experts at getting rid of irrelevancy.

My second colleague, Eyal Ophir, thought it was going to be the ability to switch from one task to another. And the third of us looked at a third task that we're not running today, which has to do with keeping memory neatly organized. So we each had our own bets, but we all bet high multitaskers were going to be stars at something.

And what did you find out?

We were absolutely shocked. We all lost our bets. It turns out multitaskers are terrible at every aspect of multitasking. They're terrible at ignoring irrelevant information; they're terrible at keeping information in their head nicely and neatly organized; and they're terrible at switching from one task to another.

....We were at MIT, and we were interviewing students and professors. And the professors, by and large, were complaining that their students were losing focus because they were on their laptops during class, and the kids just all insisted that they were really able to manage all that media and still pay attention to what was important in class -- pick and choose, as they put it.

Does that sound familiar to you?

It's extremely familiar.... And the truth is, virtually all multitaskers think they are brilliant at multitasking. And one of the big new items here, and one of the big discoveries is, you know what? You're really lousy at it. And even though I'm at the university and tell my students this, they say: "Oh, yeah, yeah. But not me! I can handle it. I can manage all these," which is, of course, a normal human impulse. So it's actually very scary....

....You're confident of that?

Yes. There's lots and lots of evidence. And that's just not our work. The demonstration that when you ask people to do two things at once they're less efficient has been demonstrated over and over and over. No one talks about it — I don't know why — but in fact there's no contradictory evidence to this for about the last 15, 20 years. Everything [as] simple as the little feed at the bottom of a news show, the little text, studies have shown that that distracts people. They remember both less. Studies on asking people to read something and at the same time listen to something show those effects. So there's really, in some sense, no surprise there. There's denial, but there's no surprise.

The surprise here is that what happens when you chronically multitask, you're multitasking all the time, and then you don't multitask, what we're finding is people are not turning off the multitasking switch in their [brain] — we think there's a switch in the brain; we don't know for sure — that says: "Stop using the things I do with multitasking. Focus. Be organized. Don't switch. Don't waste energy switching." And that doesn't seem to be turned off in people who multitask all the time.

Italics mine. So here's the thing: whether it's rude or not, multitasking is probably ruining your brain. You should stop. But if you can't do that, you should at least take frequent breaks where you're fully engaged in a single task and exercising your normal analytic abilities. So why not do that while you're socializing? It's as good a time as any.

Kevin Drum

Kevin Drum is a political blogger for Mother Jones.


Tuesday, 29 April 2014

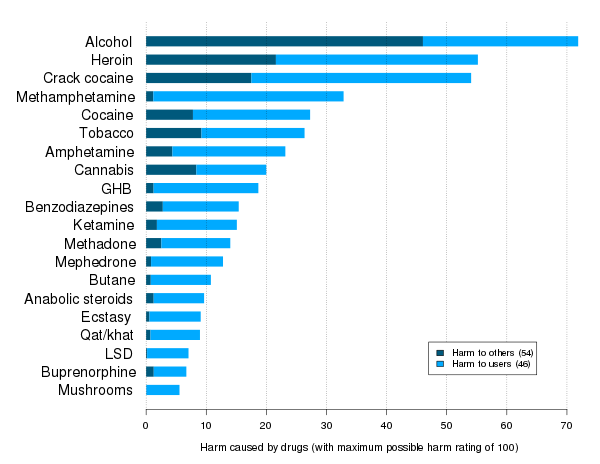

Which drug would you like? Whose advice do you consider trustworthy?

Each different and different every time?

An Apple (or Statin) a Day Will Keep the Doctor Away: Population Health Analysis

In fact, according to the new analysis by British researchers, if individuals ate just one extra apple a day, approximately 8500 deaths from vascular disease could be prevented in the UK.

The reduction in vascular deaths by adding an apple to the diet is on par with the reduction that would be observed if all UK individuals over 50 years of age were prescribed statin therapy.

In that scenario, 9400 deaths from vascular disease could be prevented if these adults were started on simvastatin 40 mg.

"Statins and apples are both iconic," lead researcher Dr Adam Briggs (University of Oxford, UK) told heartwire. "An apple a day is known throughout the English-speaking world as a saying for health, and statins are now some of the most widely prescribed drugs in the world. So, when you now have a debate in the medical world about increasing the amount of statins prescribed for primary prevention, we wanted to look at what that would mean for population health and if there were other ways of doing it."

In the US, as reported by heartwire, the new American College of Cardiology/American Heart Association (ACC/AHA) guidelines for the management of cholesterol suggest treating primary-prevention patients if they have an LDL-cholesterol level between 70 and 189 mg/dL and a 10-year risk of atherosclerotic cardiovascular disease >7.5%. In the UK, the guidelines are less aggressive and recommend statin therapy for primary prevention of cardiovascular disease if the 10-year risk score is >20%.
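To make the gap between the two thresholds concrete, here is a minimal sketch that encodes only the one-sentence summaries given above. The function name, parameter names and the "US"/"UK" labels are invented for illustration, and the real guidelines contain further criteria that the article does not mention, so this is not a clinical rule.

```python
def meets_primary_prevention_threshold(guideline, ten_year_risk_pct, ldl_mg_dl=None):
    """Return True if the threshold summarized in the article is exceeded.

    Hypothetical helper: it encodes only the article's summary,
    not the full ACC/AHA or UK guidance.
    """
    if guideline == "US":
        # Article: LDL between 70 and 189 mg/dL and 10-year risk > 7.5%
        if ldl_mg_dl is None:
            raise ValueError("the US rule as summarized needs an LDL value")
        return 70 <= ldl_mg_dl <= 189 and ten_year_risk_pct > 7.5
    if guideline == "UK":
        # Article: 10-year risk score > 20%
        return ten_year_risk_pct > 20
    raise ValueError(f"unknown guideline: {guideline!r}")


# The same made-up person (10% ten-year risk, LDL 130 mg/dL) under each rule
us_result = meets_primary_prevention_threshold("US", 10.0, ldl_mg_dl=130)
uk_result = meets_primary_prevention_threshold("UK", 10.0)
print(us_result, uk_result)
```

Under these summaries the same person crosses the US threshold but not the UK one, which is the sense in which the UK guidance is described as less aggressive.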

However, Briggs said there is debate in the UK about expanding the use of statins to all patients 50 years of age or older.

"What we're trying to say from this analysis is that dietary changes initiated at the population level can have a really meaningful effect on population health," he said. "And second, so can increasing drug prescriptions. Now, we're not trying to say that people should be swapping their statins for apples; that's not where we're going. However, if they want to add an apple to that as part of disease prevention, then by all means do so, because you'll be further along in reducing your risk of fatal heart attacks and strokes."

The new report is published December 17, 2013 in the Christmas issue of BMJ. In addition to this "Comparative Proverb Assessment Modelling Study" by Briggs and colleagues, the lighthearted Christmas issue includes research that takes a longitudinal look at virgin births in the US to raise awareness about the need for sex education, while another "meta-narrative analysis" looks at why individuals follow bad celebrity medical advice.

Apples Are Both Nutritious and Delicious

To assess the potential benefits of putting more patients on statins, the researchers used data from the Cholesterol Treatment Trialists' meta-analysis that showed vascular mortality is reduced 12% for every 1.0-mmol/L reduction in LDL cholesterol. This was applied to the annual reduction in vascular mortality rates in UK individuals 50 years of age or older who were not currently taking a statin for primary disease prevention.

For the apple-a-day assessment, they used a widely published risk-assessment model (PRIME). This model includes a multitude of dietary variables, in which investigators can substitute different food choices to assess the effects on population health. The apple was assumed to weigh 100 g, and calorie intake was assumed to remain constant. This allowed investigators to assess the effect of substituting in one apple daily on vascular mortality in the UK population.

For statin therapy, offering the treatment to an extra 17 million individuals and assuming 70% compliance would prevent 9400 vascular deaths each year. Assuming 70% compliance with the apple, even though "apples are of course both delicious and nutritious," say the researchers, the estimated reduction in vascular deaths would be 8500.

They add that prescribing statins to all those eligible would lead to 1200 cases of myopathy, 200 cases of rhabdomyolysis, and 12 300 new diagnoses of diabetes mellitus.

Interestingly, the cost of statin therapy from the drug alone would be £180 million compared with £260 million for the apples. (Is this correct?)

However, the authors point out that the National Health Service (NHS) might be able to negotiate apple price freezes ("although defrosted apples may not be so palatable," they add).
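Taking the article's figures at face value, a few lines of arithmetic show what they imply per death prevented. This is only a sketch: the variable names are mine, and it assumes the quoted costs cover the same one-year period as the quoted deaths, which the article does not state outright.

```python
# Figures quoted in the article (UK, adults aged 50 and over)
statin_offered = 17_000_000        # extra people offered a statin
compliance_pct = 70                # compliance assumed in the article
statin_deaths_prevented = 9_400    # vascular deaths prevented per year
apple_deaths_prevented = 8_500
statin_cost_gbp = 180_000_000      # drug cost only (assumed to be per year)
apple_cost_gbp = 260_000_000       # cost of the apples (assumed to be per year)

# Integer arithmetic keeps the head count exact
statin_takers = statin_offered * compliance_pct // 100

takers_per_death_prevented = statin_takers / statin_deaths_prevented
statin_cost_per_death = statin_cost_gbp / statin_deaths_prevented
apple_cost_per_death = apple_cost_gbp / apple_deaths_prevented

print(f"People actually taking a statin: {statin_takers:,}")
print(f"Statin takers per death prevented: {takers_per_death_prevented:,.0f}")
print(f"Statin cost per death prevented: £{statin_cost_per_death:,.0f}")
print(f"Apple cost per death prevented:  £{apple_cost_per_death:,.0f}")
```

On those numbers roughly 1,266 people would need to take a statin for each death prevented, and the apples come out dearer per death prevented (about £30,600 against about £19,100), so the cost comparison in the article is at least internally consistent.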

To heartwire, Briggs said he was surprised at how well the apples compared with statin therapy. However, he stressed the point is not to encourage patients to stop taking their medication.

Wednesday, 23 April 2014

Nootropics vs. Cognitive enhancement

Prevent Maintain Enhance

Nootropics vs. cognitive enhancers

Cognitive enhancers are drugs, supplements, nutraceuticals, and functional foods that enhance attentional control and memory.[5][6] Nootropics are cognitive enhancers that are neuroprotective or extremely nontoxic. Nootropics (such as Modafinil) are by definition cognitive enhancers, but a cognitive enhancer is not necessarily a nootropic.

Giurgea's nootropic criteria:

- Enhances learning and memory.

- Enhances learned behaviors under conditions which are known to disrupt them (e.g. hypoxia, sleep deprivation).

- Protects the brain from physical or chemical injury.

- Enhances the tonic cortical/subcortical control mechanisms.

- Exhibits few side effects and extremely low toxicity, while lacking the pharmacology of typical psychotropic drugs (motor stimulation, sedation, etc.).

Skondia's nootropic criteria:

I. No direct vasoactivity

- A. No vasodilation

- B. No vasoconstriction

- A. Quantitative EEG: Increased power spectrum (beta 2 and alpha)

- B. Qualitative EEG: Decreased delta waves and cerebral suffering

- A. Under normal conditions

- B. Under pathological conditions

- A. Animal brain metabolism

- 1. Molecular

- 2. Physiopathological

- B. Human brain metabolism (clinical evaluation)

- 1. A-V differences

- a. Increased extraction quotients of O2

- b. Increased extraction quotients of glucose

- c. Reduced lactate pyruvate ratio

- 2. Regional cerebral metabolic rates (rCMR)

- a. Increased rCMR of O2

- b. Increased rCMR of glucose

- 3. Regional cerebral blood flow: Normalization

- 1. A-V differences

VI. Clinical trials must be conducted with several rating scales designed to objectify metabolic cerebral improvement.

Pass the Vioxx. Taking ownership for our own health.

More Drugs Added to FDA Watch List

The agency received signals of these possible safety issues through its FDA Adverse Event Reporting System (FAERS) database in the last 3 months of 2013.

On the basis of the FAERS data, the FDA said certain kinase inhibitor products that thwart vascular endothelial growth factor, depriving malignant tumors of new blood vessels, may be associated with bullous skin conditions such as Stevens-Johnson syndrome and toxic epidermal necrolysis.

The cancer drugs in question are lapatinib (Tykerb, GlaxoSmithKline), pazopanib (Votrient, GlaxoSmithKline), sorafenib (Nexavar, Bayer HealthCare Pharmaceuticals), and sunitinib (Sutent, Pfizer).

In the case of the ADHD drug — certain generic versions of methylphenidate hydrochloride (Concerta, Janssen Pharmaceuticals) — the issue is a possible lack of therapeutic effect, which may be linked to product quality issues, according to the FDA.

A drug's appearance on the list, which grows quarter by quarter, does not mean the FDA has concluded that the drug actually poses the health risk reported through FAERS. Instead, the agency is studying whether there is indeed a causal link. If it establishes one, the FDA then would consider a regulatory response such as gathering more information to better describe the risk, changing the drug's label, or mandating a risk evaluation and mitigation strategy.

The FDA also emphasizes that it is not suggesting that clinicians should stop prescribing watch list drugs or that patients should stop taking them while the jury is out.

Potential Signals of Serious Risks/New Safety Information Identified by FAERS, October–December 2013

| Product Name: Active Ingredient (Trade) or Product Class | Potential Signal of a Serious Risk/New Safety Information | Additional Information (as of March 1, 2014) |
| --- | --- | --- |
| Certain kinase inhibitor products with vascular endothelial growth factor–inhibiting properties: Lapatinib (Tykerb), Pazopanib (Votrient), Sorafenib (Nexavar), and Sunitinib (Sutent) | Bullous skin conditions including Stevens-Johnson syndrome and toxic epidermal necrolysis | The FDA is continuing to evaluate these issues to determine the need for any regulatory action. |
| Certain methylphenidate hydrochloride extended-release tablets (generic products for the trade name Concerta) | Lack of therapeutic effect, possibly related to product quality issues | The FDA is continuing to evaluate this issue to determine the need for any regulatory action. |

Ever Questioned a Research Paper? Start To. It's more interesting than you may believe. Agenda? Real Paper? Peer reviewed? Methodology? Transparency?

Area Study; Processed Probiotic Drink May Not Prevent Antibiotic-Associated Diarrhea

They used a processed food here, instead of an actual raw product generated from live kefir grains. Real kefir has been consumed for thousands of years.

August 17, 2009

"The promise of using functional foods to mitigate disease and promote health is one of the major reasons so many resources are being placed in this exciting new field," write Daniel J. Merenstein, MD, from Georgetown University Medical Center in Washington, DC, and colleagues from the Measuring the Influence of Kefir (MILK) Study. "Kefir grains constitute both lactose-fermenting yeasts (Kluyveromyces marxianus) and non–lactose-fermenting yeasts (Saccharomyces unisporus, Saccharomyces cerevisiae, and Saccharomyces exiguus). It is believed that these probiotics deliver beneficial bacteria to the gut, improving gastrointestinal health, and may protect against [antibiotic-associated diarrhea]."

The goal of this study was to evaluate the effect of a commercially available kefir product (Probugs, Lifeway Foods, Inc) on the prevention of antibiotic-associated diarrhea among 125 children aged 1 to 5 years and presenting to primary care clinicians in the Washington, DC, metropolitan area. Participants were randomly assigned to receive kefir drink or heat-killed matching placebo, and the main study endpoint was the incidence of diarrhea during the 14-day follow-up period after children were given antibiotics.

Rates of diarrhea did not differ significantly between groups (18% in the kefir group vs 21.9% in the placebo group; relative risk, 0.82; 95% confidence interval, 0.54 – 1.43), nor did secondary outcomes differ between groups. The investigators noted some interesting interactions among initial health at enrollment and participant age and sex, suggesting the need for additional study.
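The relative risk quoted above can be checked directly from the two group rates. The sketch below uses only the percentages and the confidence interval given in the article; the variable names are mine, and the rounded rates are the only inputs because the per-group counts are not given here.

```python
kefir_rate = 0.18              # 18% of the kefir group had diarrhea
placebo_rate = 0.219           # 21.9% of the placebo group had diarrhea
ci_low, ci_high = 0.54, 1.43   # 95% confidence interval as quoted

# Relative risk is the event rate in the treated group over the control rate
relative_risk = kefir_rate / placebo_rate

# An interval that spans 1.0 is compatible with no effect in either direction
ci_includes_one = ci_low <= 1.0 <= ci_high

print(f"Relative risk: {relative_risk:.2f}")
print(f"Confidence interval includes 1: {ci_includes_one}")
```

The ratio reproduces the reported 0.82, and because the interval runs from below 1 to above 1, the result is consistent with the statement that the rates did not differ significantly.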

"In our trial, kefir did not prevent [antibiotic-associated diarrhea]," the study authors write. "Further independent research on the potential of kefir needs to be conducted."

"It is important to recognize that this trial studied specific strains at specific dosages and our findings cannot be extrapolated for other strains or outcomes," the study authors conclude. "There are some intriguing data that we believe deserve further elucidation and may hold promise for kefir's role in [antibiotic-associated diarrhea] prevention. We also believe that it is important that commercial products continue to be independently studied and subjected to high-quality research techniques, as many products appear to present themselves as a panacea while lacking patient-oriented outcome data."

Lifeway Foods, Inc, the maker of Probugs, supported this study and provided study drinks. The study authors have disclosed no relevant financial relationships.

Arch Pediatr Adolesc Med. 2009;163:750–754.

Do no harm! Well, not too much harm. 225,000 deaths per year have iatrogenic causes

What's that you say? It wasn't idiopathic but iatrogenic!

Coming soon, watchful waiting.

Iatrogenesis, or iatrogenic effect (/aɪˌætroʊˈdʒɛnɪk/; "originating from a physician"), is preventable harm resulting from medical treatment or advice to patients. Professionals who may sometimes cause harm to patients include physicians, pharmacists, nurses, dentists, psychiatrists, psychologists, and therapists. Iatrogenesis can also result from complementary and alternative medicine treatments. In the United States an estimated 225,000 deaths per year have iatrogenic causes, with only heart disease and cancer causing more deaths.[1]
